I haven’t been posting much here lately. Things have been keeping me busy enough that I haven’t really had much time to ponder what to put here.

One thing I’ve been really busy with has been our robotics team. I’ve posted about it here before and although there is lots more I could say about the whole program, this is the main aspect I’ve been personally involved with. Well, one of them…

|

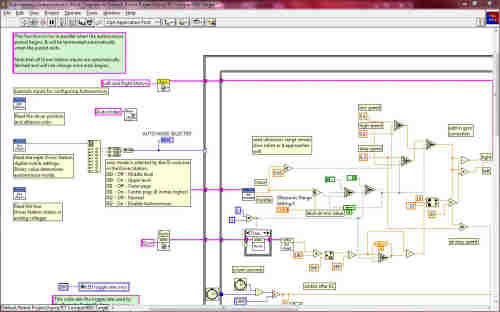

| A sample of the upper-left corner of one of four autonomous routines for the Warlocks robot. This determines what the robot does during the part of the match it operates on it’s own. This is one corner of one of four Autonomous modes. It doesn’t even fit on the computer screen all at once. The Teleop code that runs the robot when the drivers take over is just as complex. |

My role on the team is primarily the programming and the electrical design and wiring. I don’t do it alone; in fact, it’s better when more people are involved. I have another Mentor with an engineering background and two really sharp students who are also working on this. But I end up doing most of the programming to bring it all together. My hope is to get the others up to speed enough to have the students take the lead on it next year.

For three years now, we have been using a programming “language” called LabVIEW, provided by National Instruments. It’s no exaggeration to say we are still learning to use it.

In the past, we programmed a small robot controller in ANSI C. It was a proprietary system based on some Microchip PIC processors. When they changed the control system to a much more powerful National Instruments Compact RIO system, we had to make a choice – continue on with C or try out this new LabVIEW thing.

At the time, we didn’t know much about it, but we knew the Lego League teams were using a thing called an NXT controller and were programming it with a simplified programming tool similar to LabVIEW.

|

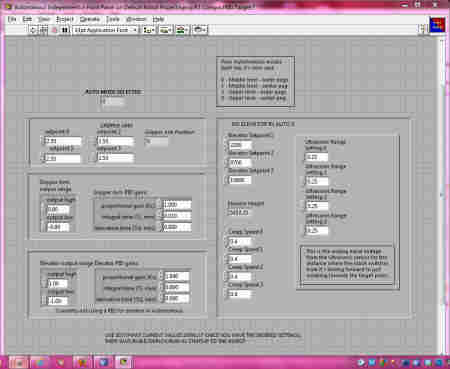

This is the Front Panel of our autonomous program. It’s sort of a configuration screen that lets us set up some of the parameters while programming. |

My hope was to find that kids would come into the team with some experience from the Lego League teams and teach me. It didn’t happen at first and the first year with the new controller was harrowing, but we did manage to make a functional program. We actually still have that robot and are still using it for program development. The original code has been completely rewritten once and probably will be again if we keep it for demos.

The second year, we did a bit better. They made some improvements to the software libraries and it got a bit easier to program. They also switched from a piece of hardware for the driver station – where the students plug in joysticks and drive the robot – to a software solution that runs on a small laptop.

We thought we would have it easy this year. After all, we had two years of experience with the programming language. Not so. We learned enough to get ourselves in over our heads. We were plagued with mysterious problems all season that slowed our programming down to a crawl. We found out that we are now able to push the poor controller to its limits, and we are fighting to keep things running fast enough that the load doesn’t bring it to a halt. Unfortunately, with no prior experience with this sort of problem, we struggled for a long time, not knowing where to turn.

We did manage to get through a regional competition in Pittsburgh. We had some problems, but thankfully, we were still able to compete effectively. We had one autonomous routine that worked. We would have liked to have more, but didn’t have time to finish them. Despite all that and the normal mechanical issues that always come up, we managed to be ranked 2nd and head up the alliance that finished with Silver medals.

We were beaten for the Gold by our old friends and neighbors from St. Catharines, the Simbotics, Team 1114. They’re the same team we finished right behind two years prior in Toronto, so history repeated itself. They’re always a powerhouse.

I’ve got to give credit to our mechanical guys. The execution on our robot, as far as a design that works and plays the game effectively, is the best we’ve ever had. Its simplicity is the kind that is just elegant. It works and works fast. The machine work is impressive for something 90% done in the school shop by students. You see a lot of “works of art” at competitions that you just know were made in professional machine shops, not by students. Not ours, but it measures up where it counts, on the field.

Anyway, since the regional, we’ve been thinking about our program and trying to figure out how to perfect it without the robot. You see, the robot is out of our hands once the six-week build period is done. We had to seal it up in a large plastic bag in March and take it to the competition still sealed. Once the competition ended, it went back into the bag and was re-sealed. It then goes to the Championships in St. Louis at the end of April. For that, it must be shipped in a crate. And it must be crated and given over to the shipping company by the Tuesday following the competition. So it was back to Lockport, put it in the crate – bag and all – and on the truck.

So how do you test the programming without the robot? We had two other robots with the same controller, but they were different configurations. One had a mecanum drive system, which was nothing like the tank drive system on the current robot. The other had a similar drive, but slippery, hard plastic wheels from the year with the regolith field. It also had nothing like the arm and elevator manipulator on this year’s robot, but it did have many motor-driven rollers that could easily run without doing any harm. We decided that just running the motors would be enough of a simulation without the actual elevator and arm.

We control the arm’s angle with a motor and read its position with a potentiometer. Simple and effective. It’s the first time we’ve done that and had it work. It actually works very well and uses a PID Loop.
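For anyone who hasn’t met a PID loop before, here is a rough sketch of the idea in Python. This is not our LabVIEW code, which is graphical and doesn’t paste into a blog post. The class name, the gains, and the idea of reading the pot as a voltage are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ArmPID:
    """Hypothetical PID controller for an arm whose angle is read from a pot."""
    kp: float  # proportional gain (illustrative value, not tuned for any robot)
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return a motor command in [-1.0, 1.0] for one control cycle."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = setpoint - measured
        self.integral += error * dt                  # accumulated error over time
        derivative = (error - self.prev_error) / dt  # how fast the error is changing
        self.prev_error = error
        output = (self.kp * error
                  + self.ki * self.integral
                  + self.kd * derivative)
        # Clamp to the range a motor controller would accept.
        return max(-1.0, min(1.0, output))


# Example: pot reads 2.0 (assumed volts), we want 2.5, loop runs every 20 ms.
pid = ArmPID(kp=0.5, ki=0.0, kd=0.0)
command = pid.update(setpoint=2.5, measured=2.0, dt=0.02)
```

The farther the pot reading is from the requested position, the harder the motor is driven, and the command shrinks as the arm closes in.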

The Elevator is belt-driven and we used an encoder to tell us how far it has gone up. Another first for us. We tried encoders on the drivetrain before, but never got them to work. We couldn’t get the PID Loop to work 100%, but it works fine with just some simple logic that stops it when it’s close to the requested value. There’s so much extra resolution on the encoder that if we’re off by 100 counts, it doesn’t matter.
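The “simple logic” amounts to something like the following Python sketch. Again, this is only an illustration of the idea and not our actual LabVIEW code. The function name, the fixed motor speed, and the sign convention (positive means up) are assumptions; the 100-count tolerance comes from the paragraph above.

```python
def elevator_command(target_counts: int, current_counts: int,
                     tolerance: int = 100, speed: float = 0.8) -> float:
    """Return a motor command for the elevator (hypothetical helper).

    Drives at a fixed speed toward the target and stops once the
    encoder is within `tolerance` counts of the requested value.
    """
    error = target_counts - current_counts
    if abs(error) <= tolerance:
        return 0.0                        # close enough: stop the motor
    return speed if error > 0 else -speed  # otherwise run up or down


# Example: 50 counts short of the target is inside the tolerance band.
at_target = elevator_command(target_counts=5000, current_counts=4950)
going_up = elevator_command(target_counts=5000, current_counts=1000)
```

Because the encoder has far more resolution than the mechanism needs, a wide tolerance band keeps the elevator from hunting back and forth around the target.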

So we hooked a spare pot and encoder up to the old robot’s rollers. We are also using an Ultrasonic Range sensor to tell us how far we are from the wall at the end of the field. It works surprisingly well. Another first. Another thing we used was a gyro sensor to tell us which heading we are facing. We managed to get it to help us drive straight during the autonomous.
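Here is a rough Python sketch of how a gyro and a range sensor can work together on a tank drive. It is a guess at the general technique, not a transcription of our program. The function names, the gain, the stop distance, the inch units, and the convention that a positive heading means the robot has rotated to the right are all assumptions.

```python
def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a motor command inside the valid range."""
    return max(low, min(high, value))


def drive_straight(base_speed: float, heading_deg: float,
                   target_heading_deg: float = 0.0,
                   kp: float = 0.03) -> tuple[float, float]:
    """Return (left, right) tank-drive commands that steer back to the target heading.

    Assumes positive heading means the robot has rotated to the right,
    so a positive error slows the left side and speeds up the right.
    """
    correction = kp * (heading_deg - target_heading_deg)
    return clamp(base_speed - correction), clamp(base_speed + correction)


def autonomous_step(base_speed: float, heading_deg: float,
                    distance_to_wall_in: float,
                    stop_distance_in: float = 24.0) -> tuple[float, float]:
    """Drive straight until the range sensor says the wall is close, then stop."""
    if distance_to_wall_in <= stop_distance_in:
        return 0.0, 0.0
    return drive_straight(base_speed, heading_deg)


# Example: the robot has drifted 10 degrees to the right, wall is far away.
left, right = autonomous_step(base_speed=0.5, heading_deg=10.0,
                              distance_to_wall_in=100.0)
```

In the example the left side slows down and the right side speeds up, which turns the robot back toward its original heading.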

So with all that on the old robot to make it act like the new one, as far as the program was concerned, we’ve been trying to exorcise the demons out of our code. We’ve been able to make enough improvements to get the four autonomous modes we want working. The processor load is still high, but somewhat improved. We’ve solicited help and advice from several sources and are waiting to hear back from an expert at NI who is looking over our program.

Hopefully, by the time we leave for Championships, everything will be ricky-tick-tick, as an engineer I once knew would say.

We have a few electrical issues and things to fix as well, so there will be a lot of work to do in the pits there.

Anyway, that’s been one of the major things eating up my time here. My bike trip is still in the planning stages and I’ve been working on the solar power system as well. More on that soon.